Deeply Immersive Audio Cues with Project Acoustics

In this series, we learned how Spatial Sound introduces a game-changing capability for developers to easily incorporate realistic and accurate 3D sound cues into games. Allowing gamers to listen to the three-dimensional space and objects around them with a full range of directional cues means, for example, that players in a first-person shooter game can genuinely feel planes flying above and battles raging below.

But what about footsteps in a narrow hallway or bullets whizzing behind a door? Spatial audio can handle directional cues, but how do we make the sounds respond to the environment and obstacles in the virtual world? This is where Project Acoustics brings cutting-edge technology to game audio.

Project Acoustics is a physics-based wave acoustics engine that uses your 3D geometry to automatically generate the sound parameters needed to create realistic audible spaces in your game. It gives life to 3D environments by accurately modeling the effect of sound waves bouncing off walls and floors and the echoing reverb of open space. Combined with Spatial Sound, Project Acoustics brings a whole new level of immersion and realism. Plus, it integrates right into game engines such as Unity and Unreal.

Project Acoustics

Microsoft’s Project Acoustics is made available to game developers to simulate realistic audio environments. It is designed for low compute and memory overhead to scale to games on desktop, console, and mobile. Built on Microsoft Research technology called Project Triton, it works much like static light baking for complex scenes. Project Acoustics bakes audio maps by analyzing and precomputing how sound waves propagate through game geometry.

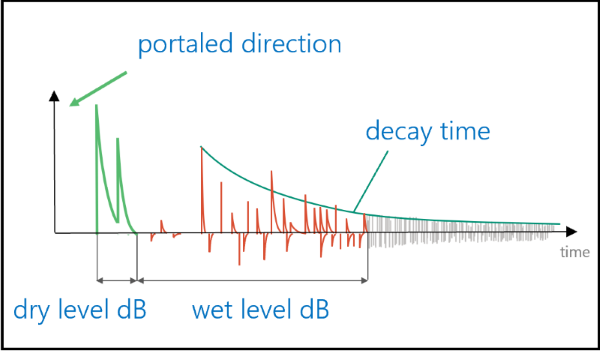

The analysis generates impulse responses between sound emitters and listener sample points, or “probes,” and derives perceptual parameters from them that describe the acoustics at each location. The perceptual parameters include information such as arrival direction, wet and dry values of reverberation, length of reverberation and decay time, and level of obstruction or occlusion.
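To make the step from an impulse response to a perceptual parameter more concrete, here is a minimal sketch of one textbook technique, Schroeder backward integration, for estimating a decay time. It is an illustration only and is not taken from Project Acoustics itself; the function name and the synthetic test signal are assumptions.

```python
import math

def decay_time_from_impulse_response(ir, sample_rate):
    """Estimate the 60 dB decay time in seconds (Schroeder backward integration).

    Illustrative only: this is a textbook method, not Project Acoustics code.
    Assumes `ir` is a non-silent impulse response that decays by at least 35 dB.
    """
    # Energy decay curve: energy remaining in the response from each sample onward.
    edc = [0.0] * len(ir)
    running = 0.0
    for n in range(len(ir) - 1, -1, -1):
        running += ir[n] * ir[n]
        edc[n] = running
    total = edc[0]
    # Express the curve in dB relative to the total energy.
    edc_db = [10.0 * math.log10(e / total) for e in edc if e > 0.0]
    # Measure the slope between the -5 dB and -35 dB points, then extrapolate to -60 dB.
    start = next(i for i, v in enumerate(edc_db) if v <= -5.0)
    end = next(i for i, v in enumerate(edc_db) if v <= -35.0)
    slope_db_per_sample = (edc_db[end] - edc_db[start]) / (end - start)
    return (-60.0 / slope_db_per_sample) / sample_rate

# Synthetic impulse response whose amplitude falls by 60 dB over 0.5 seconds.
SAMPLE_RATE = 1000
TRUE_DECAY_S = 0.5
ir = [10.0 ** (-3.0 * (n / SAMPLE_RATE) / TRUE_DECAY_S) for n in range(SAMPLE_RATE)]
estimate = decay_time_from_impulse_response(ir, SAMPLE_RATE)
print(f"estimated decay time: {estimate:.3f} s")
```

A real bake works on simulated responses for many emitter and probe pairs, so treat this as a way to build intuition rather than a description of the engine's internals.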

These values can then be applied at runtime to digital signal processing (DSP) to create an accurate representation of how sound is heard at any location within the geometry. You can use the parameters to guide your game’s sound design by applying different filters to the DSP in the audio engine. Plus, you can combine it with the Spatial Sound API for a fully immersive audio experience.
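As a rough sketch of what "applying the parameters to DSP" could look like, the example below maps a set of baked values to a few common audio-engine settings. The `PerceptualParams` fields, the `dsp_settings` function, and the cutoff range are all hypothetical choices made for illustration; the actual plugins expose their own data and controls.

```python
from dataclasses import dataclass

@dataclass
class PerceptualParams:
    # Hypothetical field names; the real plugin's data layout may differ.
    occlusion_db: float   # attenuation of the direct sound, in dB (0 = unobstructed)
    wetness_db: float     # reverb level relative to the dry signal, in dB
    decay_time_s: float   # reverberation decay time, in seconds

def db_to_gain(db):
    """Convert decibels to a linear amplitude multiplier."""
    return 10.0 ** (db / 20.0)

def dsp_settings(p, open_cutoff_hz=20000.0, blocked_cutoff_hz=1000.0,
                 max_occlusion_db=30.0):
    """Map perceptual parameters to illustrative DSP settings."""
    occlusion = max(p.occlusion_db, 0.0)
    # 0.0 = fully open path, 1.0 = treated as fully blocked (an assumed design choice).
    blocked = min(occlusion / max_occlusion_db, 1.0)
    return {
        "dry_gain": db_to_gain(-occlusion),
        "wet_gain": db_to_gain(p.wetness_db),
        # Darken the direct sound as occlusion grows by lowering a low-pass cutoff.
        "lowpass_cutoff_hz": open_cutoff_hz + blocked * (blocked_cutoff_hz - open_cutoff_hz),
        "reverb_decay_s": p.decay_time_s,
    }

behind_door = dsp_settings(PerceptualParams(occlusion_db=20.0, wetness_db=-6.0, decay_time_s=1.2))
open_field = dsp_settings(PerceptualParams(occlusion_db=0.0, wetness_db=-20.0, decay_time_s=0.3))
print(behind_door)
print(open_field)
```

The linear mapping from occlusion to filter cutoff is only one possible design; a sound designer might prefer a curve, or might drive entirely different effects from the same values.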

Workflow and Integration

Because it is very compute-heavy, Project Acoustics wraps the baking step of the sound wave simulation into a cloud process on Azure and returns the data to use at runtime. While it is possible to bake the acoustics on your PC, it can take hours or even days to process a scene locally and is likely only feasible for small scenes.

With the plugins available for the Unity and Unreal game engines, integrating advanced acoustics is straightforward.

The general workflow is as follows:

- Pre-bake: Mark acoustic geometry, assign acoustic materials, and define navigation areas for listener sampling.

- Bake: Review the calculated acoustic voxels and submit them to Azure to bake, getting back an acoustics game asset.

- Run: Load the asset and listen to the acoustics in your scene.

For detailed steps on setting up Project Acoustics in your game engine, take a look at the Unity Tutorial or Unreal/Wwise Tutorial and how to Create an Azure Account.

The Azure service simulates and analyzes the uploaded scene geometry with the listener points and creates a data set of perceptual parameters.

The time and cost to complete the bake vary with your cloud compute node type. For example, if a complex scene is estimated to take approximately 4 hours on a standard F8 node, the bake may cost about $1.60 (based on pricing at the time of writing).
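The arithmetic behind that estimate is simple enough to sketch. The example below derives the hourly rate implied by the figures above ($1.60 for 4 hours) and reuses it for a shorter bake. The function names are made up for illustration, and the rate comes only from this article's example, not from a current Azure price list.

```python
def implied_hourly_rate(total_cost_usd, hours):
    """Hourly node rate implied by a known total cost and duration."""
    return total_cost_usd / hours

def estimate_bake_cost(hours, hourly_rate_usd):
    """Estimated bake cost in USD, rounded to cents."""
    return round(hours * hourly_rate_usd, 2)

# Figures from the example above: about $1.60 for a 4-hour bake on one node.
rate = implied_hourly_rate(1.60, 4.0)

# Hypothetical shorter bake, for instance after lowering the simulation resolution.
shorter_bake = estimate_bake_cost(1.5, rate)

print(f"implied rate: ${rate:.2f}/hour")
print(f"1.5-hour bake: ${shorter_bake:.2f}")
```

Check the current pricing for your chosen node type before budgeting, since rates change over time and differ by region.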

To reduce the simulation time, you can also lower the accuracy of the simulation by choosing coarse rather than fine resolution.

These perceptual parameter data sets are flexible and you can decide to only use the data elements that are relevant to your game design. You can also load multiple data sets or stream the data with just the parameters you need for the player. This can make for a smaller amount of data to handle at runtime and save precious resources during gameplay.

The generated data is also designable, which means the parameters can be manipulated to exaggerate or reduce the effect as you wish. So if you wanted to emphasize footsteps and eerie noises to create a spooky atmosphere or make them audible from further away, it is possible to adjust and iterate on your sound design at runtime.
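One way to picture this "designable" data is as a set of baked values that pass through designer-controlled adjustments before they reach the audio engine. The sketch below uses hypothetical parameter names and an `apply_design` helper invented for this example; the actual plugins provide their own design controls.

```python
def apply_design(baked, occlusion_scale=1.0, wetness_offset_db=0.0, decay_scale=1.0):
    """Return a designer-adjusted copy of the baked values (input is left unchanged)."""
    return {
        "occlusion_db": baked["occlusion_db"] * occlusion_scale,
        "wetness_db": baked["wetness_db"] + wetness_offset_db,
        "decay_time_s": baked["decay_time_s"] * decay_scale,
    }

# Hypothetical baked values for footsteps heard from around a corner.
baked = {"occlusion_db": 12.0, "wetness_db": -9.0, "decay_time_s": 0.8}

# Spooky variant: less occlusion so footsteps carry further, plus louder, longer reverb.
spooky = apply_design(baked, occlusion_scale=0.5, wetness_offset_db=3.0, decay_scale=1.5)
print(spooky)
```

Because the adjustment is applied on top of the baked data, the physically simulated values stay intact and the designer can keep iterating without running another bake.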

In-Game Examples

The Gears of War franchise integrated Project Acoustics along with Spatial Sound in Gears of War 4 and 5 to create realistic reverbs and echoes that change with the player's position and the size of the space. The studio was the first to use Project Acoustics, applying advanced acoustics to specific sounds in Gears of War 4. It was also the first to distinguish between indoor and outdoor spaces, in Gears of War 5, streaming the data set throughout the game.

In another instance, the Sea of Thieves developers used multiple data sets to apply Project Acoustics to different geometric areas of the game, such as between outdoor islands or below the ship’s deck. This use of Project Acoustics focused exclusively on sound obstruction and occlusion.

Summary

Project Acoustics provides cues that are not only immersive for players but also give them a sense of the world around them. High-quality acoustics give an understanding of how sound travels through, or is obstructed by, the world. They can even have an impact on the accessibility of applications.

And because spatial positioning gives players a directional and locational understanding of audio, Spatial Sound and Project Acoustics together enable you to provide the highest level of immersion and realism in console, desktop, mobile, and VR/AR experiences. Project Acoustics generates acoustic perceptual parameters for you automatically offline, offering a resource-efficient way to create authentic sound environments. The data is highly flexible, allowing for continued customization to fit your game design and development needs. And the Spatial Sound API is easy to use in any game, not only AAA titles.

Suggested Resources

The best place to learn more about Project Acoustics is the Microsoft documentation page on Project Acoustics. Or you can watch the 2021 Project Acoustics Game Stack Live talk.

To get started with Project Acoustics, you can visit:

And finally, be sure to check out these Frequently Asked Questions for any questions you may have about the technology and how to use it effectively in your game.