Ori and the Will of the Wisps - Part 3

Monitoring

In the first two installments of this series, I talked about some of the whys, whats, and hows; now let's look at how it all came together. Naturally, throughout the entire project we needed to monitor the audio to make sure our work was translating well across all of the common formats and devices. My two audio leads and I were constantly going back and forth between 7.1.4 Atmos speakers, stereo speakers, and headphones (with and without spatial virtualization). Additionally, during the mixing stage of development, I ran compatibility testing in 7.1, 5.1, and stereo in various professional and consumer environments.

Overall, the mix translated very well into the different configurations, and we did not need to make many changes or compromises. The one issue that came up a few times involved panning spread when comparing speakers or virtualized spatial renders against regular, non-spatialized stereo headphones. On speakers and in virtualized renders, each ear hears some of the opposite channel because of natural crosstalk in the acoustic space, whether virtual or physical. Plain headphones have no such crosstalk, so our left/right panning sounded a little too extreme there. We ended up finding a nice middle ground for our panning that still provided good directionality on speakers or virtualized renders, without sounding too hard-panned over stereo headphones.
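The exact settings behind that middle ground aren't described here, so the following is only a generic illustration, not our actual Wwise setup. It is a minimal sketch assuming a constant-power pan law and a hypothetical `width` factor that scales the pan position toward center. With `width` below 1.0, a hard-left source still leaks a little signal into the right channel, which loosely imitates the crosstalk that speakers provide naturally.

```python
import math


def pan_gains(pan: float, width: float = 1.0) -> tuple[float, float]:
    """Constant-power stereo pan with a hypothetical width control.

    pan:   -1.0 (hard left) to +1.0 (hard right)
    width: assumed spread factor; 1.0 keeps full hard panning,
           values below 1.0 pull every source toward the center.
    Returns (left_gain, right_gain) with left**2 + right**2 == 1.
    """
    if not -1.0 <= pan <= 1.0:
        raise ValueError("pan must be in [-1, 1]")
    if not 0.0 <= width <= 1.0:
        raise ValueError("width must be in [0, 1]")
    narrowed = pan * width                     # shrink the pan range
    angle = (narrowed + 1.0) * math.pi / 4.0   # map [-1, 1] onto [0, pi/2]
    return math.cos(angle), math.sin(angle)


if __name__ == "__main__":
    # Compare a hard-left source at full width and at a narrower width.
    for w in (1.0, 0.8):
        left, right = pan_gains(-1.0, w)
        print(f"width={w}: L={left:.3f} R={right:.3f}")
```

Because the law is constant-power, narrowing the width moves a source inward without changing its overall loudness.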

Mix goals and tools

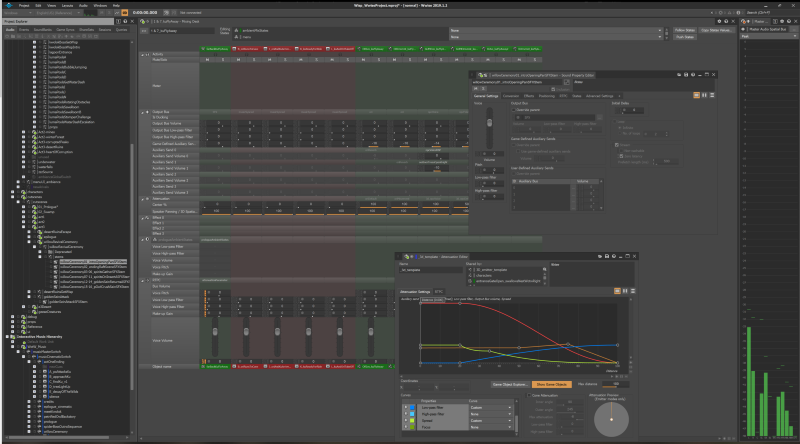

As with most game projects, there was a dedicated mix phase near the end of the development cycle, but the bulk of the mix work started early and continued every step of the way as the game came together. As systems came online or content was implemented, we were always mindful of how it affected the soundscape and the overall mix. Early in the process we established loudness guidelines for classes of content such as music, character sounds, environmental audio, and cinematics. Using these as a rough skeleton of the mix, we created busses and structures within Wwise to adjust the mix dynamically according to what was happening in the game, driven both by the sonic content itself and by design or game-state events.

I wanted the game to sound very dynamic, with the big moments hitting hard and the environment enveloping but not intrusive. It all needed to work around the amazing music as a central component of the mix. Because we were using every tool and trick to populate content into the spatial landscape, the mix phase was spent almost exclusively tweaking and manipulating 3D attenuations, emitter locations on game objects, and bus dynamics. The goal was to find a comfortable, natural space for each element while relying as little as possible on brute-force compression and EQ just to make things fit.

Because the music was composed and mixed in stereo, it needed to sit up front in the left/right mains. Mixing for spatial platforms let me use so much more of the room to work around the music without anything sounding oddly placed or unnatural. Just a little positional spread helps each element be heard clearly. Remarkably, the downmixes, whether via the Dolby Atmos renderer or Wwise's internal routing, still sounded great; both seemed to make the right "decisions" to preserve the aesthetic intent of what I was trying to do.
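Neither the Dolby Atmos renderer's nor Wwise's downmix internals are covered here, so as a point of reference only, here is a minimal sketch of the conventional ITU-R BS.775 Lo/Ro downmix from 5.1 to stereo. This is a swapped-in textbook technique, not what either tool necessarily does: center and surrounds are folded into the front pair at -3 dB, and the LFE channel is dropped.

```python
import math

# -3 dB gain (1/sqrt(2)) applied to center and surrounds in the
# ITU-R BS.775 Lo/Ro stereo downmix.
MINUS_3DB = 1.0 / math.sqrt(2.0)


def downmix_51_to_stereo(l: float, r: float, c: float, lfe: float,
                         ls: float, rs: float) -> tuple[float, float]:
    """Downmix one 5.1 sample frame to a stereo (Lo, Ro) pair.

    The LFE argument is accepted but discarded, as is common for
    this downmix. No normalization is applied, so the output can
    exceed full scale and may need a limiter or gain trim afterward.
    """
    lo = l + MINUS_3DB * c + MINUS_3DB * ls
    ro = r + MINUS_3DB * c + MINUS_3DB * rs
    return lo, ro


if __name__ == "__main__":
    # A center-only signal lands equally in both outputs.
    print(downmix_51_to_stereo(0.0, 0.0, 1.0, 0.0, 0.0, 0.0))
```

The -3 dB split means a center-only signal keeps the same total power once it is spread across the left and right outputs.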

Final thoughts

I'm really happy that we were able to create such a rich and immersive audio presentation for Ori and the Will of the Wisps, and we're really proud of how everything came together in the end. The combination of the target platforms and the development tools made it essentially frictionless to jump right into designing and mixing in Dolby Atmos from the very beginning.

---

Award-winning sound veteran Kristoffer Larson joined Formosa Interactive in the summer of 2016. With over two decades of sound experience, including post, games, and VR/AR, Larson truly understands the needs of developers. He’s happiest when a project is collaborative across all levels, from concept to integration. Recent projects with Formosa include Ori and the Will of the Wisps, Marvel Strike Force, State of Decay 2, and Mission: ISS.

Prior to joining Formosa, Larson founded his own company, Tension Studios, based in Seattle, Washington. After years in various internal audio roles at companies like Cranky Pants Games, Konami, WB Games and Dolby Labs, he set out to create a game-centric audio studio that catered specifically to interactive development. His unique approach and experience can be heard on Tiny Bubbles, Fable: The Journey, State of Decay and Halo 4.