Dolby Atmos Studio Configuration

Introduction

This documentation provides information about creating immersive audio content to be delivered via Dolby Atmos output, including setting up a monitoring environment.

About this documentation

This documentation is for a game audio developer who is creating and mixing game audio for Dolby Atmos. It provides an overview of Dolby Atmos game audio, describes setting up a monitoring environment, provides recommendations for content generation and mixing, and lists some resources.

Dolby Atmos

Dolby Atmos is a suite of tools and technology for the creation, transportation, and reproduction of sound in all axes of three-dimensional space.

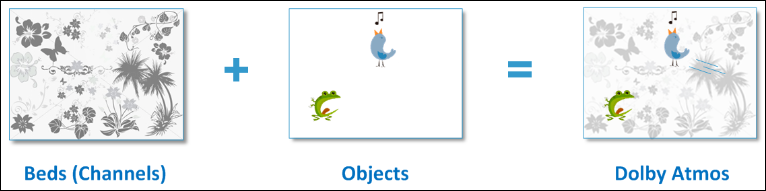

Audio is created and transmitted as objects, instead of traditional channels. Audio is still delivered via current protocols and standards (HDMI, Dolby TrueHD, and Dolby Digital Plus).

With the addition of height, Dolby Atmos unlocks the ability to pan audio fully in three-dimensional space (x, y, and z axes). The Dolby Atmos format is an object-based format, which means sound sources are essentially packets of audio data combined with coordinate information and other metadata, such as size.

Because the location metadata is relative and not absolute, the positional reproduction (and resolution) of the audio is scaled and limited only by the Dolby Atmos rendering device (typically, an audio/video receiver (AVR)). That means that a content creator can author a single Dolby Atmos mix and expect it to be rendered in the most accurate way possible for a given speaker configuration (from headphones to a 34-speaker home theater system).
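The following minimal Python sketch illustrates why relative metadata lets a single mix scale to different rooms. The object carries normalized coordinates and a size, and a hypothetical renderer step scales those coordinates to the room it is playing in. The `AudioObject` class, its coordinate ranges, and `to_room_meters` are assumptions for illustration only. They are not the Dolby Atmos metadata format or a renderer API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AudioObject:
    """Hypothetical audio object: a handle to audio data plus positional metadata.

    Assumed convention (illustration only): x runs from left (-1.0) to
    right (1.0), y from back (-1.0) to front (1.0), and z from ear level
    (0.0) to the ceiling (1.0). A size of 0.0 means a point source.
    """
    name: str
    x: float
    y: float
    z: float
    size: float = 0.0

    def __post_init__(self) -> None:
        # Positions are relative to the room, so they must stay within range.
        for axis, value, low in (("x", self.x, -1.0), ("y", self.y, -1.0), ("z", self.z, 0.0)):
            if not low <= value <= 1.0:
                raise ValueError(f"{axis}={value} is outside [{low}, 1.0]")


def to_room_meters(obj: AudioObject, width_m: float, depth_m: float, ceiling_above_ears_m: float):
    """Scale normalized metadata onto one specific room (hypothetical renderer step).

    The origin is the center of the listening area at ear level.
    """
    return (obj.x * width_m / 2, obj.y * depth_m / 2, obj.z * ceiling_above_ears_m)


# The same metadata lands in proportionally the same place in two different rooms.
heli = AudioObject("helicopter", x=0.5, y=0.5, z=1.0, size=0.2)
print(to_room_meters(heli, 4.0, 5.0, 2.5))    # (1.0, 1.25, 2.5)
print(to_room_meters(heli, 10.0, 12.0, 4.0))  # (2.5, 3.0, 4.0)
```

The metadata never names a speaker or a room dimension. Deciding which speakers reproduce that position is left entirely to the renderer.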

Although the use of audio objects provides desired control for discrete effects, other aspects of a game soundtrack also work effectively in a channel-based environment. For example, many ambient effects or reverberations actually benefit from being fed to arrays of loudspeakers. Although these could be treated as objects with sufficient width to fill an array, it is beneficial to retain some channel-based functionality. Dolby Atmos therefore supports beds in addition to audio objects. Beds are effectively channel-based submixes or stems. These beds can be created in different channel-based configurations, such as 5.1, 7.1, or 9.1 (including arrays of overhead loudspeakers).

Content creation environment

A game audio studio must ensure that the systems it uses for playback of interactive and linear content can support the addition of monitor channels needed for Dolby Atmos.

- Development of interactive and linear content

- Configurations for monitoring a linear Dolby Atmos mix

- Print mastering

- In-game monitoring

- Premixing

Development of interactive and linear content

There are fundamental differences between the development of audio for interactive experiences and traditional linear playback.

Linear media has a mastering stage near the very end of the creation process, where the mix is considered final and is immutably frozen. Games, however, essentially play back a live audio mix at run time. In essence, the game audio engine is a very complex yet efficient digital audio workstation that uses myriad rules and data sources to play back a collection of individual assets. The resulting mix becomes cohesive only as it is delivered to the end user. As a result, monitoring environments must support not only a traditional control-room signal flow but also decoded signals from a game console or PC.

Game audio studios have long handled configurations for both interactive and linear content successfully, using combinations of multichannel input and output devices, routing infrastructure, hardware decoders, and consumer AVRs. Introducing Dolby Atmos technology into the monitoring environment does not fundamentally change these approaches. However, you should examine each stage of the studio signal flow to ensure that the systems can support the additional monitor channels. The following sections examine the signal flow and workflow for two activities: creating assets in a digital audio workstation, and monitoring decoded Dolby Atmos signals from a game system.

Configurations for monitoring a linear Dolby Atmos mix

When creating a linear Dolby Atmos mix, you listen to mono and stereo assets as well as a Dolby Atmos render from a Pro Tools environment.

Even if a game is going to be presented in Dolby Atmos, the vast majority of assets and sound sources that are created are mono or stereo. There are only a couple of content creation scenarios where you monitor a Dolby Atmos render from a Pro Tools environment:

- Mixing linear content intended to be played back as a full Dolby Atmos mix (cinematics, music, ambience beds)

- Mixing linear content for trailers or promotional material intended to be distributed or displayed via Blu-ray Disc or a streaming media service that supports Dolby Atmos

Dolby Atmos content creation affects the signal flow of a control room environment from the speakers all the way to the digital audio workstation.

Monitors

Your control room should include a set of similar monitors set up in a 7.1.4 speaker layout for producing content for playback in a home theater environment.

The 7.1.4 speaker layout provides enough positional resolution that a designer can be confident that a mix will accurately translate to systems with larger or smaller speaker counts.

Note: In describing a Dolby Atmos speaker configuration, the number of channels used to reproduce sounds in the height plane is enumerated by adding an extra digit to the channel nomenclature. For example, a 5.1.2 system is a traditional layout of five speakers on the horizontal plane (left, center, right, left surround, and right surround) plus one subwoofer, and two speakers in the height plane.
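The naming convention in this note can be expressed as a small parser, sketched here in Python. `SpeakerLayout` and `parse_layout` are hypothetical helpers. Treating a two-part name such as 5.1 as having zero height speakers is a simplification made for this sketch.

```python
from typing import NamedTuple


class SpeakerLayout(NamedTuple):
    """Speaker counts for a layout name such as "5.1.2" or "7.1.4"."""
    ear_level: int  # speakers on the horizontal plane
    lfe: int        # subwoofers (low-frequency effects)
    height: int     # speakers in the height plane

    @property
    def total(self) -> int:
        return self.ear_level + self.lfe + self.height


def parse_layout(name: str) -> SpeakerLayout:
    """Parse a layout name; a two-part name such as "5.1" is treated as having no height speakers."""
    parts = name.split(".")
    if len(parts) not in (2, 3) or not all(p.isdigit() for p in parts):
        raise ValueError(f"unrecognized layout name: {name!r}")
    counts = [int(p) for p in parts] + [0] * (3 - len(parts))
    return SpeakerLayout(*counts)


print(parse_layout("5.1.2"))        # SpeakerLayout(ear_level=5, lfe=1, height=2)
print(parse_layout("7.1.4").total)  # 12
```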

Ideally, all monitors (with the exception of the subwoofer) should be of the same make and model to avoid any timbral shift due to unmatched frequency responses. If this is not possible due to budget or logistics, some monitor manufacturers produce models designed to match their main models as closely as possible. These models come in a form factor that is easier to mount on the ceiling, either flush or on the surface.

Note: Dolby Atmos enabled speakers do not work in a control room. For consumers, Dolby Atmos enabled speakers are a convenient option for height channels. These speakers take the place of ceiling-mounted speakers by using a combination of cabinet design, filters, and DSP in the Dolby Atmos renderer to reflect sound off of the ceiling back down to the listening area. Most consumers prefer the more diffuse sound that is produced by the Dolby Atmos enabled speakers, but this soft imaging does not provide the resolution that a content creator or mixer needs. Also, most control rooms feature acoustic treatment on the ceiling designed to diffuse and/or absorb reflections, which naturally reduces the effectiveness of a sound-reflecting speaker. Additionally, for any linear content creation or mixing in Dolby Atmos, neither the Dolby local renderer nor the Dolby Rendering and Mastering Unit (RMU) supports Dolby Atmos enabled speakers. It is common for mixing facilities to have a listening room set up as a typical home theater environment using Dolby Atmos enabled speakers in order to listen to how a mix translates on a typical consumer system.

Contact your Dolby Games representative for a detailed recommendation of monitor specifications and placement in a small control room environment.

Monitor control and tuning

Monitor control, bass management, room correction, and time/frequency correction are essential for mixing and monitoring Dolby Atmos content. These tools may be present in more than one place in your environment. Make sure that each aspect is addressed exactly once, preferably in a way that is easy to defeat if you switch between signal flows.

- Monitor control

Make sure that any monitor controller has enough inputs and outputs, with the correct channel count, to support your complete workflow and signal flow. Instant access to individual speaker mutes and solos is very helpful when mixing. It also helps you understand exactly which speakers are in use for a given panning situation.

- Bass management

Bass management is essential for redirecting the lower frequencies to the speakers that can handle them (in most cases, a dedicated subwoofer). One of the features of Dolby Atmos is that all of the surround speaker channels are full bandwidth, including the height channels. This allows for accurate movement of a sound through three-dimensional space without any timbre shift. Because every one of these channels can carry low-frequency content, bass management should account for the height channels as well as the main and surround speakers.

- Correction and calibration

Compensation for room acoustics, delays for speaker position differences, and correction for individual speaker frequency response are all tools that can and should be applied if needed in order to maximize relative uniformity between all channels. Listening level calibration should be performed in order to align the individual monitor volumes and establish a listening environment conducive to creating a mix with good dynamic range. Volume, phase, frequency, and time alignment of audio is always important in a control room whenever you have more than one speaker, and to an even greater degree in a Dolby Atmos environment.
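The delay part of correction and calibration can be derived from each monitor's distance to the mix position. The farthest monitor gets no delay, and each closer monitor is delayed by the difference in acoustic travel time. This Python sketch assumes a speed of sound of 343 m/s and distances that have already been measured. The speaker labels and distances are hypothetical. In practice, the resulting values would be entered into whichever device handles correction in your signal flow.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate value for air at about 20 degrees C


def alignment_delays_ms(distances_m: dict[str, float]) -> dict[str, float]:
    """Delay in milliseconds to add to each monitor so that sound from every
    monitor arrives at the mix position at the same time.

    The farthest monitor gets no delay; each closer monitor is delayed by the
    difference in acoustic travel time.
    """
    farthest = max(distances_m.values())
    return {
        name: (farthest - distance) / SPEED_OF_SOUND_M_PER_S * 1000.0
        for name, distance in distances_m.items()
    }


# Hypothetical measured distances (meters) from four monitors to the mix position.
delays = alignment_delays_ms({"L": 2.0, "R": 2.0, "C": 1.9, "Ltf": 1.6})
print({name: round(ms, 2) for name, ms in delays.items()})
# {'L': 0.0, 'R': 0.0, 'C': 0.29, 'Ltf': 1.17}
```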

Digital audio workstation

The digital audio workstation (in this case, Avid Pro Tools) is essentially the brain of a Dolby Atmos playback environment. Use the Dolby software renderer plug-in to monitor Dolby Atmos content in real time.

The Dolby Atmos content creation tools are a suite of software tools for Pro Tools that includes the renderer plug-in. The software renderer knows the speakers available in a system (through configuration during setup of the renderer) and determines which speakers to use from moment to moment to re-create the sound the mixer intended. The renderer lets edit suites and smaller mix rooms work with a software-only solution for monitoring of Dolby Atmos content through standard Pro Tools hardware.

Linear Dolby Atmos content is created with the Dolby Atmos content creation tools, including a Pro Tools panner. Specific information regarding the installation, configuration, and use of the Dolby Atmos content creation tools can be found with the software installers.

Dolby has two Pro Tools loaner racks for developers who do not have a Pro Tools setup. Contact your Dolby Games representative for more information.

Print mastering

For linear content, Dolby Atmos content is delivered in a print master.

When a Dolby Atmos mix is ready to be output for delivery, a print master is created using a Dolby RMU. The bed and object audio data and associated metadata are recorded during the mastering session to create a print master. Dolby RMUs are available for purchase. Contact us for more information.

In-game monitoring

The typical way to monitor Dolby Atmos content is through the output of a game audio engine at run time, coming out of a PC or console via HDMI into an AVR.

The signal flow for monitoring in-game content is much simpler, because the AVR handles monitor control, bass management, correction, and calibration. An AVR can be integrated into a control room signal flow, usually as an alternative input into the monitor controller setup. Alternatively, an AVR can be combined with a set of consumer speakers to function as an independent monitoring chain.

Premixing

You can use the Dolby Atmos content creation tools to create and listen to a premix of linear content in a 5.1-channel equipped editing room.

We recommend using the Dolby Atmos Production Suite for premixing, available from the Avid store for $299.

Mixing and implementation recommendations

Dolby Atmos provides creative and aesthetic benefits that you can implement through content creation and mixing decisions.

- Three dimensions and elevation

- Resolution and object size

- Full-frequency surrounds

- Mix clarity

- Clarity of individual assets

- Objects, bed, and submixes

- In-game dynamic objects

- Emitter placement

- Binaural rendering

- Linear content

Three dimensions and elevation

The most immediately apparent feature of Dolby Atmos is the addition of height channels during playback, which allows a mixer to place sounds anywhere in three-dimensional space around the listener.

Height channels open up a new dimension for panning and positioning audio, whether it is dynamically moving around or placed statically. It is worth noting that human hearing is more sensitive to changes in azimuth (horizontal plane) than elevation. This means that panning changes up and down may need to be a bit larger in order for a listener to perceive them compared to movement in the horizontal plane.

Resolution and object size

In addition to coordinate metadata to position an audio object precisely anywhere within the listening environment, an object also has a size parameter.

The size parameter increases or decreases the number of loudspeakers used to render a particular object. When rendering in Dolby Atmos, decorrelation is automatically applied across loudspeaker feeds. This increases the perceived image size and impression of envelopment and ambience.
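The relationship between size and loudspeaker count can be illustrated with a deliberately simple toy model in Python. A size of 0 engages only the nearest speaker, and larger sizes engage every speaker within a proportionally larger radius. This is not how the Dolby Atmos renderer works internally. The speaker coordinates, the `max_radius` scaling, and the function name are assumptions, and neither speaker gains nor decorrelation are modeled.

```python
import math

# Assumed normalized positions for the 7.1.4 monitors (x: left/right,
# y: back/front, z: ear level 0 to ceiling 1). The LFE channel is omitted
# because this toy model only deals with positional speakers.
LAYOUT_714 = {
    "L": (-1.0, 1.0, 0.0), "C": (0.0, 1.0, 0.0), "R": (1.0, 1.0, 0.0),
    "Lss": (-1.0, 0.0, 0.0), "Rss": (1.0, 0.0, 0.0),
    "Lrs": (-1.0, -1.0, 0.0), "Rrs": (1.0, -1.0, 0.0),
    "Ltf": (-0.5, 0.5, 1.0), "Rtf": (0.5, 0.5, 1.0),
    "Ltr": (-0.5, -0.5, 1.0), "Rtr": (0.5, -0.5, 1.0),
}


def speakers_for_object(position, size, layout=LAYOUT_714, max_radius=2.5):
    """Toy model: return the speakers an object of a given size would engage.

    Size 0.0 uses only the nearest speaker (a point source). Larger sizes
    engage every speaker within a radius proportional to the size.
    """
    if not 0.0 <= size <= 1.0:
        raise ValueError("size must be in [0.0, 1.0]")
    distances = {name: math.dist(position, spk) for name, spk in layout.items()}
    nearest = min(distances, key=distances.get)
    radius = size * max_radius
    engaged = {name for name, d in distances.items() if d <= radius}
    engaged.add(nearest)  # always keep at least the nearest speaker
    # Sort by distance, then by name, so ties come out in a stable order.
    return sorted(engaged, key=lambda name: (distances[name], name))


for size in (0.0, 0.5, 1.0):
    print(size, speakers_for_object((0.0, 1.0, 0.0), size))
```

For an object placed at the center speaker, sizes 0.0, 0.5, and 1.0 engage 1, 5, and all 11 positional speakers in this toy layout.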

Both position and size should be taken into account when placing and panning objects in order to find the right balance between sounding like a point source or a more diffuse zone. This balance can often change as the relationship changes between the listener perspective and the source. This should be a familiar concept for anyone working with three-dimensional game audio engines. This same concept and practice can be applied when mixing linear content with the Dolby Atmos Panner plug-in and automation within Pro Tools.

Full-frequency surrounds

In contrast with previous surround formats, every channel (except the Low-Frequency Effects channel) of a Dolby Atmos system can reproduce a full-frequency signal.

Reproducing a full-frequency signal is essential to the ability to pan an object anywhere in the listening space without any shift in timbre. Content creators and mixers should take advantage of this when placing and panning sounds. You can create a more immersive mix by placing sounds and sources farther off screen, without concern for a limited frequency range. The ability to move music and ambient sounds out beyond the screen can create a larger, more enveloping image.

Mix clarity

The features of Dolby Atmos allow the mixer to provide more space in the soundstage, with less sonic competition between all of the sources.

Three-dimensional sound positioning, object size and resolution, and full-frequency surrounds make a more open and immersive mix possible. Because you have more positional options for placing or distributing sound sources, you do not need to rely as heavily on aggressive EQ or dynamics processing to make elements fit in the mix. This is particularly beneficial for making room for sources that need to be locked to the screen. It also improves dialogue intelligibility and the clarity of onscreen sounds.

Clarity of individual assets

As a result of the improved clarity possible in a Dolby Atmos mix, the quality of individual assets becomes more apparent, for better or worse. Basically, it is not as easy to hide poor-quality sources or edits in the mix.

Because a Dolby Atmos mix can expand the audio scene beyond the screen into the listening environment, sound sources can have an extended life before and after they are featured on screen. When mixing, you will need to think more about where sounds are coming from and going to as you dynamically pan sources on and off screen. Sound designers will need to create enough content to tell that full story.

Objects, bed, and submixes

It is important to remember that any DSP or submixing on a sound source needs to be done before it is assigned and panned as a Dolby Atmos audio object.

Whether creating linear content or working with a game audio engine, the outputs of Dolby Atmos audio objects are routed directly to an encoder without another mix stage. The bed is an effective way to use traditional channel-based mixing methods in a Dolby Atmos environment. Diffuse, ambient sources, environmental DSP returns, and submixes can all be routed to a multichannel bus that is in turn assigned to the individual bed object inputs.

In-game dynamic objects

There are a few ways that a game developer can pan and position sources as Dolby Atmos audio objects during game play.

The most straightforward method to pan and position an object is to attach a sound emitter to a game object and pass the positional information directly to the game engine panner. Game objects could be visible onscreen objects that have an associated sound, or they could be invisible actors used solely for placing or animating sound sources.
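With this method, each emitter's world position is converted into a direction and distance relative to the listener before it reaches the panner. The Python sketch below shows that conversion under assumed conventions: x points right, y points forward, z points up, and listener yaw is measured counterclockwise from +y. `listener_relative` is a hypothetical helper. Audio middleware generally performs this step internally from the 3D positions you pass to it, using its own conventions.

```python
import math


def listener_relative(emitter, listener, listener_yaw_deg):
    """Convert an emitter's world position into listener-relative terms.

    Assumed world axes: x to the right, y forward, z up. listener_yaw_deg is
    the listener's heading, measured counterclockwise from +y when viewed
    from above.

    Returns (azimuth_deg, elevation_deg, distance_m): azimuth is 0 straight
    ahead and positive to the listener's right; elevation is positive above
    ear level.
    """
    dx, dy, dz = (e - l for e, l in zip(emitter, listener))
    yaw = math.radians(listener_yaw_deg)
    # Project the horizontal offset onto the listener's right and forward vectors.
    right = dx * math.cos(yaw) + dy * math.sin(yaw)
    forward = -dx * math.sin(yaw) + dy * math.cos(yaw)
    azimuth = math.degrees(math.atan2(right, forward))
    elevation = math.degrees(math.atan2(dz, math.hypot(right, forward)))
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    return azimuth, elevation, distance


# A helicopter 10 m ahead of and 10 m above a listener facing +y.
print(tuple(round(v, 1) for v in listener_relative((0.0, 10.0, 10.0), (0.0, 0.0, 0.0), 0.0)))
# (0.0, 45.0, 14.1)
```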

Another method to pan and position an object is to create and use three-dimensional object panning tools within the audio engine that provide the coordinate and size metadata to be associated with the Dolby Atmos audio objects.

Emitter placement

Using Dolby Atmos, the exact position of a sound emitter is more apparent to the listener. This may require a game developer to provide more specific locations for sound components of a game object.

For example, a knight character that has a sword for a weapon may have all of its sounds emitting from that object’s root node, which is typically located either at ground level or waist level. In a traditional multichannel or stereo playback environment, the z axis of a sound emitter is not reproduced. In a two-dimensional playback environment, these sounds are naturally reproduced at the horizontal level of the speakers. A content creator may only need to adjust for the extra distance to the listener position.

In a Dolby Atmos playback environment, if these sounds are routed as audio objects, the listener would be able to hear that the footsteps are coming from the character's waist, or that sword swings sound like they are coming from the floor. Best practice is to create nodes or bones for each unique sound emitter position (ground level for footsteps, sword tip for whooshes).
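Per-emitter nodes can be sketched as a game object that stores one local offset per sound type. Each emitter's world position is then derived from the root node's position and heading. The class, emitter names, and offset values are hypothetical. A real engine would typically take these positions from the character's bones or attachment points each frame.

```python
import math
from dataclasses import dataclass, field


@dataclass
class GameObject:
    """Hypothetical game object with one sound emitter point per sound type."""
    root: tuple[float, float, float]  # world position of the root node (meters)
    yaw_deg: float = 0.0              # heading, counterclockwise about the z (up) axis
    # Local offsets from the root node (x right, y forward, z up), one per emitter.
    emitters: dict[str, tuple[float, float, float]] = field(default_factory=dict)

    def emitter_position(self, name):
        """World position of a named emitter: rotate its offset by the yaw, then translate."""
        ox, oy, oz = self.emitters[name]
        yaw = math.radians(self.yaw_deg)
        wx = ox * math.cos(yaw) - oy * math.sin(yaw)
        wy = ox * math.sin(yaw) + oy * math.cos(yaw)
        rx, ry, rz = self.root
        return (rx + wx, ry + wy, rz + oz)


# A knight whose root node sits at waist height (1.0 m above the floor).
knight = GameObject(
    root=(0.0, 0.0, 1.0),
    emitters={
        "footsteps": (0.0, 0.0, -1.0),    # ground level
        "sword_whoosh": (0.6, 0.9, 0.4),  # sword tip, raised and in front
    },
)
print(knight.emitter_position("footsteps"))  # (0.0, 0.0, 0.0)
print(tuple(round(v, 3) for v in knight.emitter_position("sword_whoosh")))  # (0.6, 0.9, 1.4)
```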

Binaural rendering

Panning and positioning accuracy can be even more important when monitoring through a Dolby Atmos binaural renderer, especially in virtual or augmented reality experiences.

The perception of proximity to the listener can be enhanced with binaural rendering, providing the ability to more specifically position sounds anywhere from a great distance to literally right next to the listener's ears. Additionally, binaural renderers can reproduce sounds so that they can appear to come from below the listener. This increased resolution adds an even greater importance to managing the exact location of sound emitters, whether they are placed individually or their positions are inherited from their associated game objects.

Linear content

Because there is no singular approach for delivering Dolby Atmos linear content, we recommend that you contact your Dolby Games representative to work with you to identify an approach that meets your particular needs.

The most common uses of linear content in games are for cinematics, music, and ambient sounds. How a game developer approaches delivering linear content is based on a number of factors, including technical implementation, resource limitations, and workflow preferences. In addition, the implementation of Dolby Atmos may differ depending on the audio engine that is being used.

Studio signal flow examples

The signal flow for your mixing studio depends on several things, including the type of content you are creating and mixing.

Note: There are many different approaches to routing and monitoring that depend on the particular logistics or workflow of your facility; the following examples are generalized suggestions only. Contact your Dolby Games representative for recommendations and guidance for your specific studio needs.

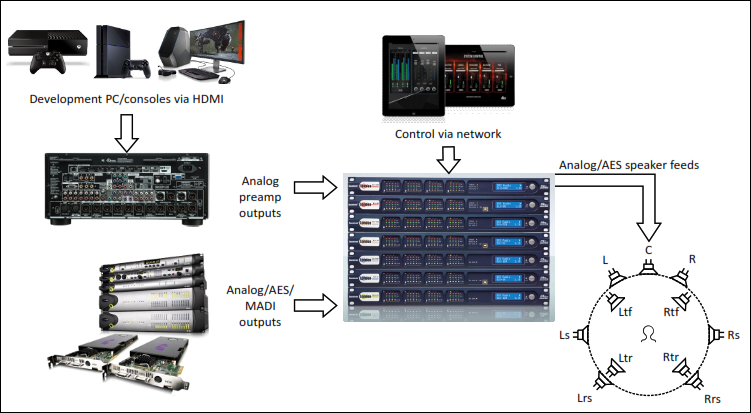

For monitoring Dolby Atmos for in-game and linear content creation and mixing, one example is to have two source paths that feed into an all-in-one routing matrix and monitor controller, which is connected directly to self-amplified monitors. One source path is from a consumer Dolby Atmos compatible AVR, which acts as a switcher to choose the desired console or PC that is outputting a Dolby Atmos signal via HDMI. The other source path is the output of Pro Tools, whether directly from the I/O unit or a mixing console.

Both of these source paths are post-renderer speaker feeds that are routed directly to their respective monitors via the router/monitor controller unit. A number of manufacturers make audio distribution hardware that is essentially a rack of digital signal processors. This hardware can be combined with I/O units to create a highly configurable routing and monitoring system. Using visual scripting in a computer application, you can program the DSP to handle all of the signal routing, correction EQ and speaker delays, bass management, and monitor control.
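The router/monitor controller in this first example can be modeled simply: select one of the two source paths, then apply speaker mutes and solos. This Python sketch is a toy model for reasoning about the routing, not the configuration format of any particular DSP product. The speaker labels, source names, and the rule that a mute overrides a solo are all assumptions.

```python
from dataclasses import dataclass, field

# Assumed 7.1.4 speaker labels.
SPEAKERS_714 = ("L", "R", "C", "LFE", "Lss", "Rss", "Lrs", "Rrs", "Ltf", "Rtf", "Ltr", "Rtr")


@dataclass
class MonitorController:
    """Toy model of a router/monitor controller with post-renderer source paths."""
    sources: dict[str, tuple[str, ...]]  # source path name -> speaker feeds it provides
    selected: str
    muted: set[str] = field(default_factory=set)
    soloed: set[str] = field(default_factory=set)

    def select(self, source: str) -> None:
        if source not in self.sources:
            raise KeyError(f"unknown source path: {source}")
        self.selected = source

    def active_speakers(self) -> list[str]:
        """Speakers currently fed by the selected source path.

        Design choice for this sketch: when any solo is engaged, only soloed
        speakers play, and a mute always wins over a solo.
        """
        feeds = self.sources[self.selected]
        if self.soloed:
            feeds = tuple(s for s in feeds if s in self.soloed)
        return [s for s in feeds if s not in self.muted]


controller = MonitorController(
    sources={"AVR (game HDMI)": SPEAKERS_714, "Pro Tools": SPEAKERS_714},
    selected="Pro Tools",
)
controller.soloed = {"Ltf", "Rtf", "Ltr", "Rtr"}  # audition only the height layer
print(controller.active_speakers())  # ['Ltf', 'Rtf', 'Ltr', 'Rtr']
controller.soloed.clear()
controller.select("AVR (game HDMI)")
print(len(controller.active_speakers()))  # 12
```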

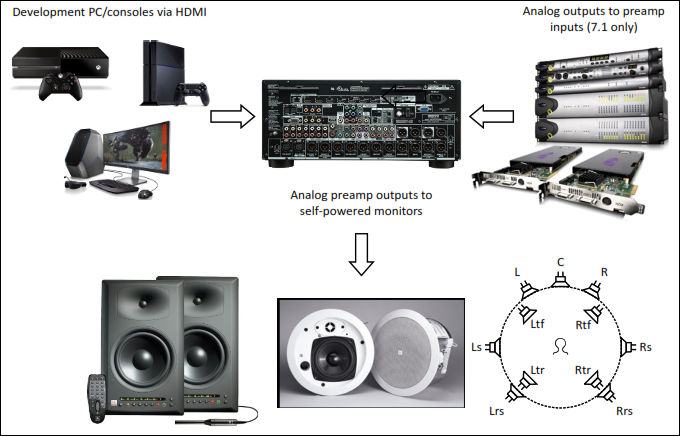

Another simpler example is to have a consumer source path and a digital audio workstation source path, and to use a Dolby Atmos compatible AVR as an all-in-one renderer and monitor controller.

Using an AVR that has +4 dBu line-level XLR outputs makes it easier to integrate professional self-powered monitors. This configuration supports monitoring of in-game Dolby Atmos content rendered by the AVR. For linear content creation, however, it supports only channel-based content, with a maximum channel count dictated by the preamp input (typically eight, for a 7.1-channel signal). You could use Dolby Atmos Production Suite on the Pro Tools computer to monitor a Dolby Atmos premix, but the overhead content would be rendered into the horizontal speakers. Also note that most multichannel preamp inputs do not route through the bass management circuit, so bass management must be handled elsewhere in the signal flow.

Dolby tools and resources

There are several Dolby tools, resources, and services that are available to game audio content creators and mixers.

- Dolby Games team

Dolby has a dedicated team of professionals to help answer any question you might have relating to developing game audio for Dolby Atmos. Services include consultation on studio setup, Dolby audio technology integration, third-party tools information, Dolby logo and trailer usage, and print mastering. For more information, contact us or email games@dolby.com.

- Game audio engines

Both of the leading game audio middleware developers support Dolby Atmos in their products, on compatible systems:

  - Wwise from Audiokinetic provides a Dolby Atmos panner plug-in for panning objects to a 7.1.4 output bus. See www.audiokinetic.com.

  - FMOD Studio from Firelight Technologies features three-dimensional audio object events and bed objects that can output to a compatible three-dimensional audio API. See www.fmod.com.

- Dolby Atmos content creation tools

This is a suite of software tools and plug-ins that allow content creators and mixers to pan, position, and monitor a Dolby Atmos mix. Contact us for more information and access to these tools.

- Dolby RMU

The Dolby RMU is used to create a Dolby Atmos print master, which is used in the process of encoding a Dolby Atmos soundtrack for distribution or playback. Linear Dolby Atmos assets to be played back with in-game content must be created through the mastering process. Contact us for more information about Dolby Atmos mastering facilities, to schedule access to one of our loaner Dolby RMU racks, or for inquiries into certifying your facility for Dolby Atmos mixing and mastering.

- Dolby Media Producer Suite

This is a suite of tools for encoding and decoding audio (both for disc and streaming delivery). The Dolby Media Producer Suite is used to encode Dolby Atmos print masters for authoring Blu-ray Discs, ultra high definition content, and over-the-top streaming. The Dolby Media Producer Suite is available for retail sale. For more information and a list of dealers, visit www.dolby.com and search for "Dolby Media Producer Suite."

- Dolby Creative Services

Dolby offers room tuning and calibration services for facilities. Contact us for more information and to be directed to the appropriate field office.

Glossary

- AVR

Audio/video receiver. An audio amplifier and audio/video (A/V) switching combination device for a home theater. It contains inputs for all of the audio and video sources and outputs to one or more sets of speakers and one or more monitors or TVs.

- HDMI

High-Definition Multimedia Interface. A high-speed, high-capacity format for transferring digital information and the specific hardware interface for the format.

- Dolby RMU

Dolby Rendering and Mastering Unit.